All three major search engines have now released public APIs so users can more easily automate Web search queries without the need for page-scraping.

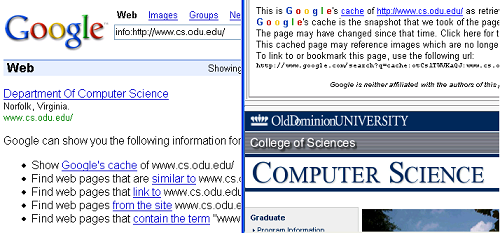

Google was the first to release an API in 2002. They required users to register for a license key which allowed them to make 1000 queries per day.

Yahoo was next on the scene (early 2005), and in an effort to outdo Google, they upped the number of daily queries to 5000 per IP address. This is a much more flexible arrangement than Google's limit, which is tied to the license key no matter which IP address the queries come from.

MSN finally came on board in late 2005 with a 10,000 daily limit per IP address.

All three services have a message board or forum where users can communicate with each other (and hopefully a representative from the service providers). In my experience, Yahoo does the best job at monitoring the forum (actually an e-mail list) and giving feedback.

I have recently used the Yahoo and MSN APIs to develop Warrick, a tool for recovering websites that disappear due to a catastrophe. I was unable to use the Google API because of its restrictive nature (1000 daily queries). Had I used the API, users would have had to sign up for a Google license before running Warrick, and I didn’t want every Warrick user to jump through that hoop. Yahoo and MSN’s more flexible query limits allowed me to use their APIs much more easily. I still limited my daily Google queries to 1000 to be polite and avoid getting my queries ignored.
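Capping your own queries at a daily limit can be done with a small counter. The following is only a minimal sketch and not how Warrick actually does it: the `DailyQuota` class and its `try_acquire` method are hypothetical names, and it assumes the budget resets on the calendar day rather than on a rolling 24-hour window.

```python
from datetime import date


class DailyQuota:
    """Hypothetical helper: count queries against a fixed per-day limit."""

    def __init__(self, limit):
        self.limit = limit
        self.day = None   # calendar day the current count belongs to
        self.used = 0

    def try_acquire(self, today=None):
        """Count one query and return True if today's budget allows it."""
        today = today or date.today()
        if today != self.day:
            # First query of a new day: start a fresh count.
            # (Assumption: a calendar-day reset, not a rolling 24 hours.)
            self.day = today
            self.used = 0
        if self.used >= self.limit:
            return False
        self.used += 1
        return True


google_quota = DailyQuota(1000)
if google_quota.try_acquire():
    pass  # send the query here
```

Calling `try_acquire()` before every request and skipping the request when it returns `False` keeps a script under the limit without relying on the search engine to enforce it.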

Below is a comparison of the Google, Yahoo, and MSN Web search APIs that I have compiled. This may be useful for someone who is considering using the APIs.

Underlying technology:

G: SOAP

Y: REST

M: SOAP

Number of queries available per day:

G: 1000 per 24 hours per user ID

Y: 5000 per 24 hours per application ID per IP address

M: 10,000 per 24 hours per application ID per IP address

Getting started (examples that are supplied directly by the search engines):

G: Examples in Java and .NET

Y: Examples in Perl, PHP, Python, Java, JavaScript, Flash

M: Examples in .NET

Access to title, description, and cached URL?

G: Yes

Y: Yes

M: Yes

Access to last updated/crawled date?

G: No

Y: Last-Modified date if present

M: No

Access to images:

G: No

Y: Yes

M: No

Maximum number of results per query:

G: 10 (Ouch!)

Y: 100

M: 50

Maximum number of results per query that can be obtained by "paging" through the results:

G: 1000

Y: 1000

M: 250
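To see what these limits mean in practice, here is a rough calculation using only the numbers from the comparison above. The dictionary layout and function names are my own, and it assumes every request returns the maximum number of results.

```python
import math

# Limits taken from the comparison above.
LIMITS = {
    "Google": {"per_query": 10, "max_paged": 1000, "daily_queries": 1000},
    "Yahoo": {"per_query": 100, "max_paged": 1000, "daily_queries": 5000},
    "MSN": {"per_query": 50, "max_paged": 250, "daily_queries": 10000},
}


def requests_to_page_all(per_query, max_paged):
    """Requests needed to page through every available result of one search."""
    return math.ceil(max_paged / per_query)


def full_searches_per_day(per_query, max_paged, daily_queries):
    """How many searches can be paged to the end within one day's quota."""
    return daily_queries // requests_to_page_all(per_query, max_paged)


for name, lim in LIMITS.items():
    print(name, requests_to_page_all(lim["per_query"], lim["max_paged"]),
          full_searches_per_day(**lim))
```

Under those assumptions, paging to the end of a single search costs 100 requests on Google, 10 on Yahoo, and 5 on MSN, so one day's quota covers 10, 500, and 2000 fully paged searches respectively.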